Enterprises struggle with disruption. Their records of dealing with major disruptive technologies – such as the advent of the web, smartphone, and cloud – are generally not encouraging. Responses are often too narrow, reactive, and late. In our team’s close collaborations with the late Harvard Business School Professor Clayton Christensen, and in the years since, we’ve seen a relatively small number of enterprises rise to the challenge and capture the upside of upheaval. It isn’t easy, but a playbook does exist.

AI promises at least as much disruption as those other technologies. It carries innumerable dangers: poor quality outputs, employee alienation, new forms of competition, regulatory crackdown, and many more. But it also offers tremendous upside through hyper-tailored offerings, lightning-fast responsiveness, and step-change reductions in costs.

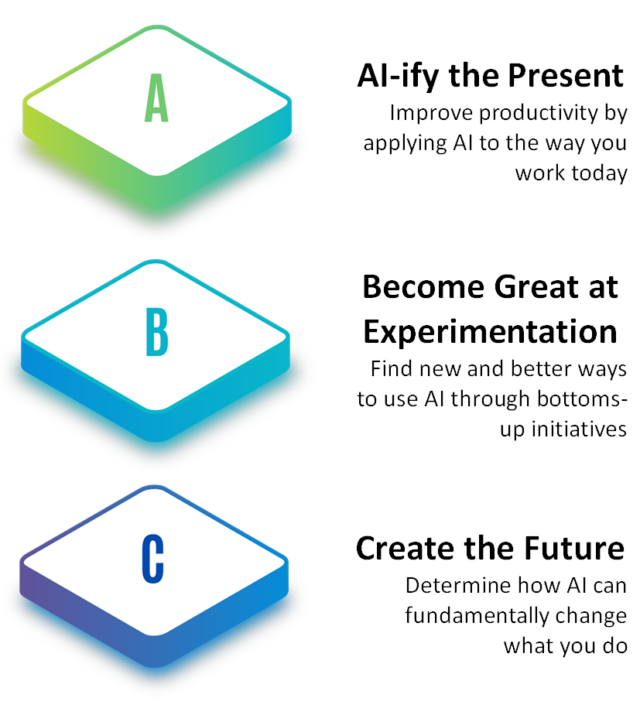

Today we take a close look at how to handle the disruption, drawing on lessons and case studies from organizations both small and giant. Our approach lays out three routes, and you need to take all three. You also must pursue them all at once. Unlike with other initiatives, you won’t be able to work over distinct time horizons with different levels of urgency, because the industry’s rate of change won’t allow you that luxury.

Thankfully, the approach is as simple as ABC:

AI-ify the Present

Much of the current writing about AI deployment in enterprises focuses on productivity enhancement. McKinsey has estimated that the potential worldwide economic gain from generative AI is $2.6 trillion to $4.4 trillion annually across the 63 use cases it examined. About 75 percent of that estimate lies in four areas: customer operations, marketing and sales, software engineering, and R&D. These are huge numbers, and they warrant immediate investigation. If you aren’t pursuing these productivity gains, your competitors are.

Methods to Use

The graveyard of failed IT initiatives is vast, but there are two key ways to avoid that fate here:

- Focus on Jobs to be Done – Start with understanding the full set of jobs that your users are trying to get done. We advocate combining Jobs to be Done with tools such as journey maps to create a holistic view.

- Deploy 360-Degree Systems Thinking – Look at all the stakeholders who have to be aligned for new solutions to work. What risks or adoption obstacles might each perceive? Who should your foothold users be to generate broader “pull” for AI systems rather than rely on organizational “push”? While these questions apply to any tech system, they’re even more critical for AI, given the need to think broadly about where data will come from, how it will be used, and how feedback learning will occur.

Watch Outs

We see organizations falling prey to four traps in their rush toward productivity enhancement:

- Lack of Human-Centered Design – Begin with users’ problems and work backwards to solutions, looking at all the levers (not just AI) at your disposal to create systems that fully address both the user’s situation and potential barriers to adopting new approaches.

- Not Blending Forms of AI – The best solutions aren’t cleanly divided by technology. They blend algorithmic AI as a foundation for determining actions with generative AI to tailor outputs or structure data inputs.

- Poor Data Quality – AI systems are only as good as the data they process, but some companies’ efforts aren’t chartered to re-think how that data is obtained. Consider the whole cycle of data, from where it originates to how granularity is preserved to how system outputs are blended with continuing contextual inputs so that the AI system doesn’t become just a data echo chamber.

- Haphazard Feedback and Learning – Machine learning requires feedback, but it’s tempting to under-invest in this aspect of AI systems in the rush to deployment. Don’t. Give a lot of thought to how your solutions will not just be trained but continue to learn. The IT in AI systems is often widely available, whereas data and learning systems can be much more proprietary. This is where advantage may lie.

Become Great At Experimentation

If there’s one thing about AI that’s well agreed, it’s that we can’t be certain about what the future will hold. In situations of high uncertainty, it pays to be outstanding at fast and inexpensive experimentation. Experiments create ownable options and open up possibilities. Then you can scale up as you learn more.

Great experimentation does not mean just letting a thousand flowers bloom. That would suck up huge amounts of time, attention, and resources without producing highly usable outputs. Effective experiments are clearly defined, crafted with full awareness about resource limitations, and designed to create learnings quickly and cost-effectively. If your firm doesn’t do this well, the right time to build these muscles is now.

Methods to Use

We recommend a five-step process for becoming great at disciplined experimentation in a given arena:

- Establish the Knowns – First, establish what you know as fact and what you don’t know, including the X-factors that could upend your plans.

- Determine Hypotheses – From there, tease out the key hypotheses that you want to test. Keep in mind that some hypotheses might be more fundamental than others, and therefore might need to be tested earlier. These hypotheses may involve Jobs to be Done, but also other factors including what triggers or impedes behavior change, the suitability of AI outputs, how those outputs get integrated into workflows, what training data is most useful, and much more.

- Develop Tests – Then, consider how you might investigate each of these hypotheses using the scientific method. How can you break hypotheses into small, easily testable components?

- Prioritize – Once you’ve designed your experiments, consider the time, cost, and risk associated with each. Weigh these against the importance of each hypothesis to decide which experiments must come first and which can come later. This will give you a priority list to adjust along the way.

- Capture Learnings – Finally, set up a system by which you can quickly capture learnings and adjust. Obtain tangible measurements from these experiments. Your system should include a way to decide which experiments to follow up with, know if more are needed, and determine when you’ve learned enough from a given test. Critically, it should include a mechanism for ending experiments and dropping ideas that don’t pan out.
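To make the Prioritize step a little more concrete, here is a minimal Python sketch built on loudly stated assumptions: each experiment is scored from 1 to 5 on importance, time, cost, and risk, and experiments are ranked by importance divided by the sum of the other three. The scoring scale, that ratio, the `Experiment` class, and the example hypotheses are all hypothetical illustrations, not a formula prescribed by this approach; your team may weigh these factors quite differently.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    # Hypothetical scoring scheme: every field is a 1-5 judgment call.
    name: str
    importance: int  # how fundamental the underlying hypothesis is
    time: int        # relative time needed to run the test
    cost: int        # relative cost of the test
    risk: int        # relative risk of running the test

    def priority(self) -> float:
        # Assumed heuristic: more important, faster, cheaper, safer tests rank higher.
        return self.importance / (self.time + self.cost + self.risk)


def prioritize(experiments: list[Experiment]) -> list[Experiment]:
    """Return experiments ordered from run-first to run-later."""
    return sorted(experiments, key=lambda e: e.priority(), reverse=True)


# Hypothetical backlog of AI experiments for illustration only.
backlog = [
    Experiment("Do agents trust AI-drafted replies?", importance=5, time=2, cost=1, risk=2),
    Experiment("Which training data improves routing?", importance=4, time=4, cost=3, risk=2),
    Experiment("Will customers accept AI chat at checkout?", importance=3, time=3, cost=3, risk=4),
]

for exp in prioritize(backlog):
    print(f"{exp.priority():.2f}  {exp.name}")
```

Because the list is simply re-sorted from the current scores, it can be adjusted along the way: as learnings come in, update a score (or retire an experiment entirely) and re-run `prioritize`.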

Watch Outs

Consider the potential pitfalls of embracing experimentation:

- Pilot Hell – Pilots often sound alluring, but each one takes up time for all manner of staff. Control the number of pilots and your overall resource commitments.

- Poor Governance – Ensure that risks are well-articulated and that there are clear guidelines for what systems may or may not be considered. Have risks and resource commitments agreed cross-functionally so you think about things from multiple perspectives.

- Focusing on the Wrong Data – Be precise about which are your dependent and independent variables. At the same time, understand the full system of use and monitor for unintended consequences.

- Difficulty Scaling – While it can be entirely appropriate to run experiments with systems that can never scale up with their current design, you should have a clear perspective on what would need to change to roll out a system more broadly.

Create The Future

Think about the big winners from the advent of the internet. Did Amazon or Netflix, for example, simply put what was offline into online form? No. It’s the same for smartphones – Uber and Meta, for instance, used the technology to fundamentally re-think what was possible. AI should be no different. Productivity gains and experimentation are absolutely appropriate to pursue, but the biggest wins lie in capturing new markets. These efforts may take a while to bear fruit, so the right time to get started is now.

Methods to Use

At a high level, embrace this six-step process:

- Start with the problems that AI can help to address. What relevant things is it really good at doing? For instance, where in your industry are there issues with unstructured data, untailored recommendations, costly customer service, long turnaround times on internal processes, etc.?

- Search for the big areas that have those problems. What customers or users provide the most potential gain through focusing on them? What trends are affecting their contexts? What is their full set of Jobs to be Done, not just the ones that AI solves for? As an analogy, think about Uber. A smartphone’s accessibility and location awareness were essential to the service, but Uber also solved other problems, such as estimating what time you’ll arrive at a destination, which made the whole package more compelling.

- Understand the triggers and obstacles to adopting new solutions in those top use cases. These include what people need to stop doing in order to start embracing something else.

- Assess the business dynamics of how high-priority opportunities can be exploited. What diverse set of reasonable scenarios provides context for what you can do? What capabilities will you need to thrive in those scenarios?

- Look broadly at the levers for creating full solutions that bring particular options to life. Take advantage of approaches such as the 10 Types of Innovation to consider how you can go beyond the AI product to find additional vectors for change.

- Find the footholds among customers or users for new approaches. Radical changes like those promised by AI don’t occur evenly; they start in footholds. Locate which ones are the best for you.

Watch Outs

Of course, all this is difficult to achieve. If it were easy, intense competition would make the potential gains much less attractive. Among the many possible pitfalls, pay attention to these:

- Pet Projects – Be certain to encourage vision and listen to how people imagine the future, but make their inputs as specific as possible and understand their inspirations.

- Paralysis – The flipside of over-investing in a few pet projects is becoming paralyzed by too many options. Sort out what kinds of knowledge you have, build a manageable number of distinct scenarios, and determine which strategies will work best in which cases. Three to five truly divergent scenarios are usually enough to stay humble about what you know while remaining decisive enough to get moving.

- Not Starting with Customers and Users – Be critical about what you really know. If you don’t fully grasp customers’ or users’ root motivations, then build that knowledge before you start solving for the wrong problems.

- Wrong Questions – People can’t give a thumbs up or thumbs down on solutions they barely grasp. Understand their motivations and don’t focus too much on their reference points from today. Those will change.

- Not Linked to Action – Key business questions need to be in focus from the get-go, and scenarios need to be linked to how you will win within them.

However you decide to proceed, seize the moment. AI offers tremendous promise as well as potential peril. If you’re not taking the initiative, your rivals will be. This is the time to act.

More of this approach is featured in my book JOBS TO BE DONE: A Roadmap for Customer-Centered Innovation.

Branding Strategy Insider is a service of The Blake Project: A strategic brand consultancy specializing in Brand Research, Brand Strategy, Brand Growth and Brand Education